ETL with CrateDB¶

ETL / data pipeline applications and frameworks for transferring data in and out of CrateDB.

Apache Airflow / Astronomer¶

Apache Airflow is an open source software platform to programmatically author, schedule, and monitor workflows, written in Python. Astronomer offers managed Airflow services on the cloud of your choice, in order to run Airflow with less overhead.

Airflow has a modular architecture and uses a message queue to orchestrate an arbitrary number of workers. Pipelines are defined in Python, allowing for dynamic pipeline generation and on-demand, code-driven pipeline invocation.

Pipelines are parametrized with the Jinja templating engine. To extend the system, you can define your own operators and extend libraries to fit the level of abstraction that suits your environment.
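The idea of defining a pipeline in Python and generating its tasks dynamically can be illustrated without Airflow itself. The following is a minimal sketch using only the standard library: `build_pipeline`, `execution_order`, and the table names are hypothetical, and `graphlib.TopologicalSorter` merely stands in for a scheduler. It is not Airflow's API.

```python
from graphlib import TopologicalSorter


def build_pipeline(tables):
    """Generate a task graph: one export task per table, then a final report task.

    Returns a mapping of task name -> set of upstream task names.
    """
    graph = {}
    for table in tables:
        # Export tasks have no upstream dependencies.
        graph[f"export_{table}"] = set()
    # The report task depends on every export task.
    graph["report"] = {f"export_{table}" for table in tables}
    return graph


def execution_order(graph):
    """Return task names in an order that respects the dependencies."""
    return list(TopologicalSorter(graph).static_order())


# Hypothetical table names; adding one to the list adds a task to the graph.
pipeline = build_pipeline(["sensors", "metrics"])
order = execution_order(pipeline)
```

Because the graph is built by ordinary Python code, the set of tasks can follow from configuration or from a database query instead of being written out by hand.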

Managed Airflow

Astro is Astronomer's managed Airflow service, aimed at teams at any stage of their data journey.

Astro runs on the cloud of your choice and manages Airflow for you, so you can focus on your data while connecting securely to any service in your network.

Create Airflow environments with a click of a button.

Protect production DAGs with easy Airflow upgrades and custom high-availability configs.

Get visibility into what’s running across environments, with analytics views and easy interfaces for logs and alerts.

Pay down technical debt and learn Airflow best practices from the experts behind the project. Get support, fast-tracked bug fixes, and same-day access to new Airflow versions.

Apache Flink¶

Apache Flink is a framework and distributed processing engine for stateful computations over unbounded and bounded data streams, written in Java.

Flink has been designed to run in all common cluster environments, perform computations at in-memory speed and at any scale. It received the 2023 SIGMOD Systems Award.

Apache Flink greatly expanded the use of stream data-processing.

Managed Flink

A few companies specialize in managed Flink services.

Aiven offers Aiven for Apache Flink, a managed service.

Immerok Cloud’s offering is being merged into the Flink service managed by Confluent.

Apache Kafka¶

Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

Managed Kafka

A few companies specialize in managed Kafka services. We can’t list them all; see the overview of managed Kafka offerings for more.

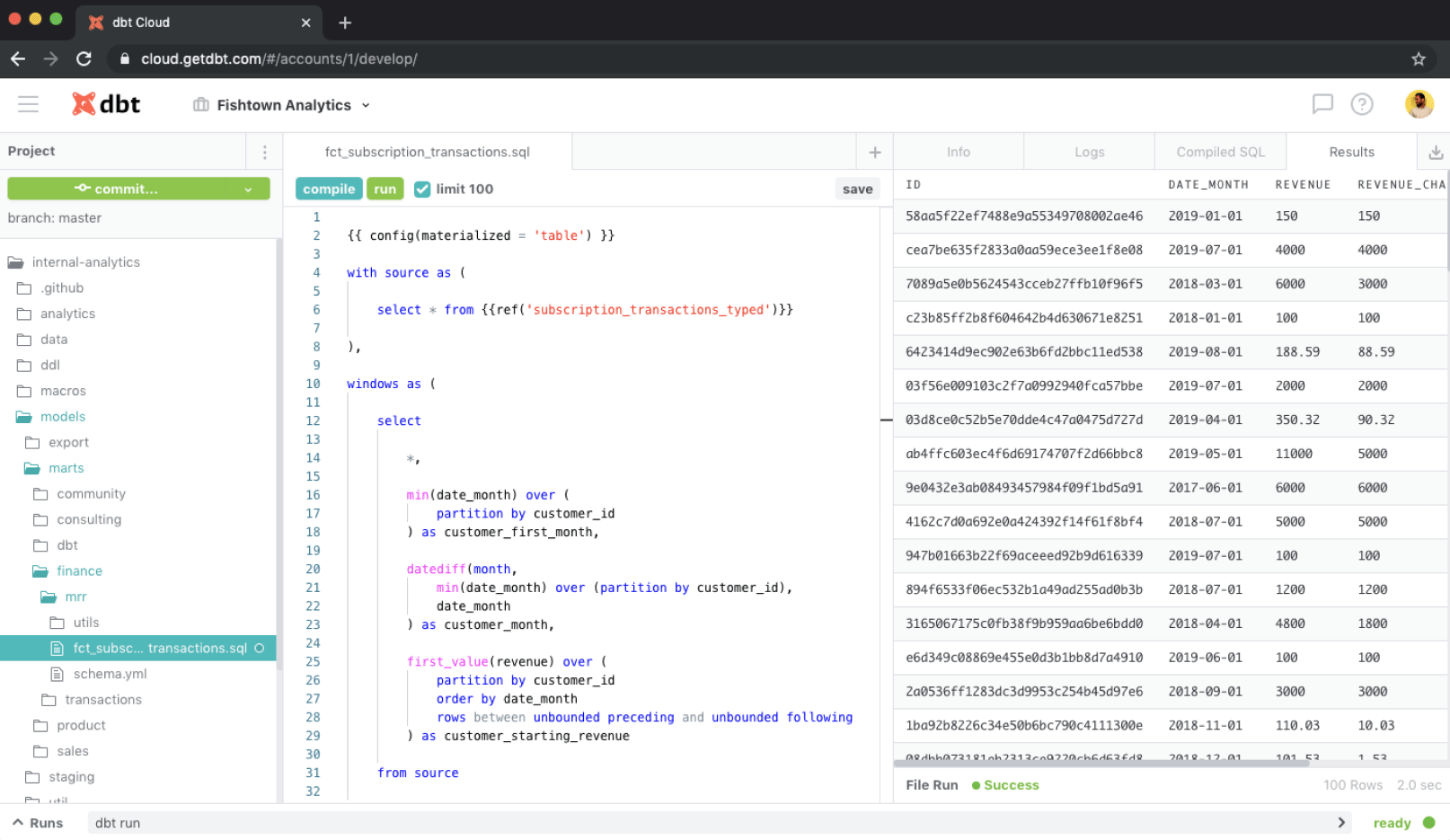

dbt¶

dbt is an open source tool for transforming data in data warehouses using Python and SQL. It is an SQL-first transformation workflow platform that lets teams quickly and collaboratively deploy analytics code following software engineering best practices like modularity, portability, CI/CD, and documentation.

dbt enables data analysts and engineers to transform their data using the same practices that software engineers use to build applications.

With dbt, anyone on your data team can safely contribute to production-grade data pipelines.

The idea is that data engineers make source data available to an environment where dbt projects run, for example with Debezium or with Airflow. Afterwards, data analysts can run their dbt projects against this data to produce models (tables and views) that can be used with a number of BI tools.

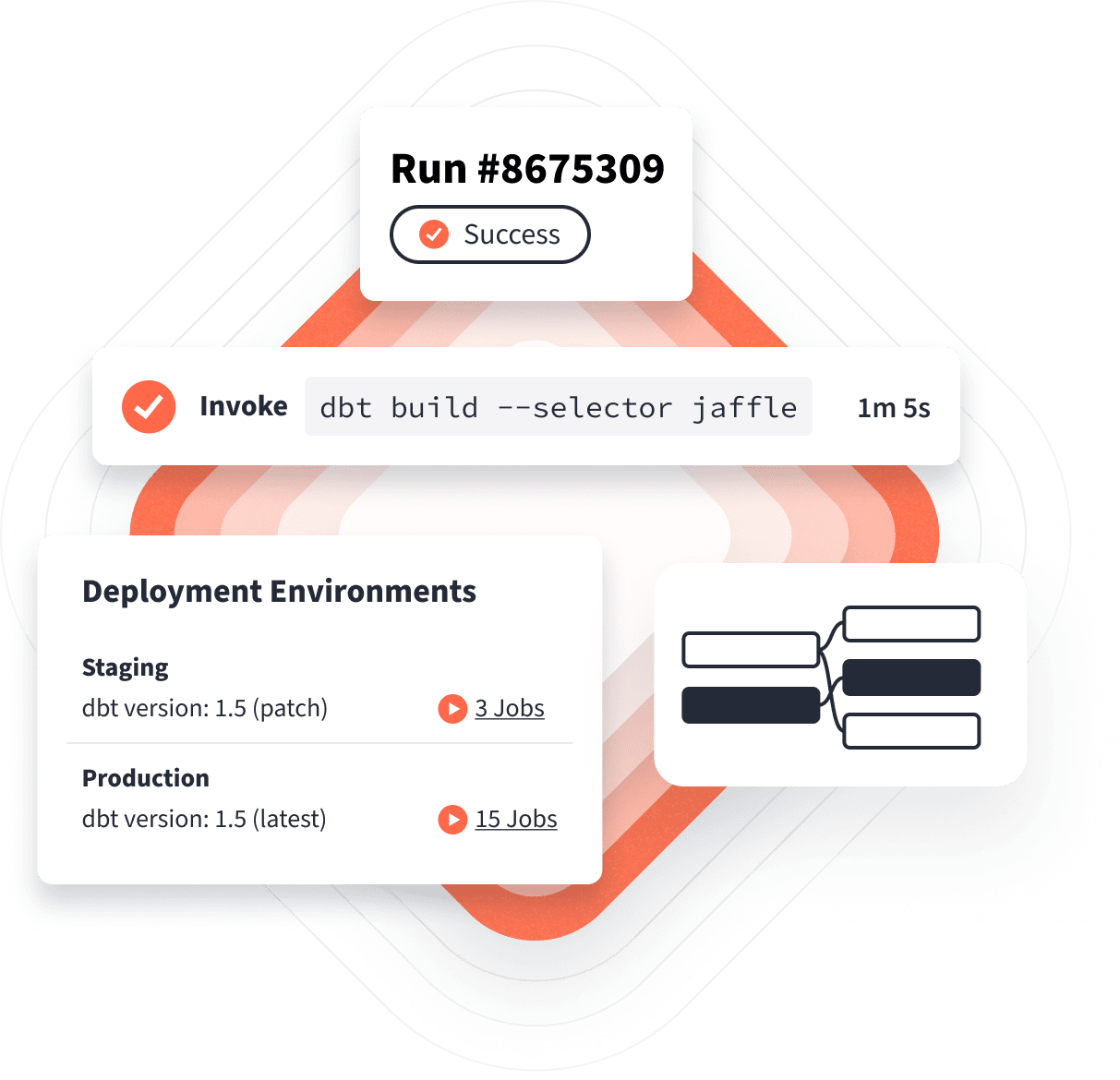
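A dbt model is, at its core, a SELECT statement that gets materialized as a table or view. The following sketch illustrates that idea only: it uses Python's built-in `sqlite3` module as a stand-in for a warehouse and does not involve dbt. The table `raw_orders`, the view `customer_totals`, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Source data, as a data engineer might land it (hypothetical table and values).
conn.execute("CREATE TABLE raw_orders (id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, "alice", 10.0), (2, "bob", 5.0), (3, "alice", 2.5)],
)

# The "model": a SELECT statement materialized as a view.
conn.execute(
    """
    CREATE VIEW customer_totals AS
    SELECT customer, SUM(amount) AS total
    FROM raw_orders
    GROUP BY customer
    """
)

# A BI tool would query the model like any other relation.
rows = conn.execute(
    "SELECT customer, total FROM customer_totals ORDER BY customer"
).fetchall()
```

In an actual dbt project, the SELECT statement would live in its own `.sql` file, and dbt would take care of creating the view or table in the target database.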

Managed dbt

With dbt Cloud, you can skip time-consuming setup and the struggles of scaling your data production. dbt Cloud is a full-suite service that is built for scale.

Start building data products quickly using the dbt Cloud IDE with integrated security and governance controls.

Schedule, deploy, and monitor your data products using the scalable and reliable dbt Cloud Scheduler.

Help your data teams discover and reuse data products using hosted docs or integrations with the powerful Discovery API.

Extend your workflow beyond dbt Cloud with 30+ seamless integrations covering a range of use cases across the Modern Data Stack, from observability and data quality to visualization, reverse ETL, and much more.

Ship more high-quality data and scale your development like the thousands of companies that use dbt Cloud. They have used its collaboration-friendly interface to eliminate the bottlenecks that limit growth.

Debezium¶

Debezium is an open source distributed platform for change data capture. After pointing it at your databases, you can subscribe to an event stream of all row-level changes (inserts, updates, and deletes).
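To make the notion of an event stream more concrete, here is a minimal sketch of a consumer that replays change events into an in-memory replica. It assumes simplified events that carry only the `op`, `before`, and `after` fields of a Debezium change event; real events include more, such as source metadata. The function `apply_change_event` and the `id` primary key are hypothetical.

```python
def apply_change_event(replica, event):
    """Apply one simplified change event to a replica keyed by primary key."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # create, snapshot read, update: the new row state is in "after".
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":
        # delete: the old row state is in "before".
        replica.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unsupported operation: {op!r}")
    return replica


# Hypothetical events, in the order they would arrive on the stream.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "name": "alice"}},
    {"op": "u", "before": {"id": 1, "name": "alice"},
     "after": {"id": 1, "name": "alicia"}},
    {"op": "c", "before": None, "after": {"id": 2, "name": "bob"}},
    {"op": "d", "before": {"id": 2, "name": "bob"}, "after": None},
]

replica = {}
for event in events:
    apply_change_event(replica, event)
```

A pipeline that writes into CrateDB would translate each event into an INSERT, UPDATE, or DELETE statement instead of mutating a dictionary.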

Kestra¶

Kestra is an open source workflow automation and orchestration toolkit with a rich plugin ecosystem. It enables users to automate and manage complex workflows, defining them either declaratively or imperatively in a scripting language such as Python, Bash, or JavaScript.

Kestra comes with a user-friendly web-based interface including a live-updating DAG view, allowing users to create, modify, and manage scheduled and event-driven flows without the need for any coding skills.

Plugins are at the core of Kestra’s extensibility. Many plugins are available from the Kestra core team, and creating your own is easy. With plugins, you can add new functionality to Kestra.

Node-RED¶

Node-RED is a programming tool for wiring together hardware devices, APIs and online services within a low-code programming environment for event-driven applications. It allows orchestrating message flows and transformations through an intuitive web interface.

It provides a browser-based editor that makes it easy to wire together flows from the wide range of elements in its palette, called “nodes”, and to deploy them to its runtime in a single click.

Managed Node-RED

FlowFuse and FlowFuse Cloud provide self-hosted and managed DevOps tooling for Node-RED, respectively, so you can reliably deliver Node-RED applications in a continuous, collaborative, and secure manner.

Collaborative Development: FlowFuse adds team collaboration to Node-RED, allowing multiple developers to work together on a single instance. With FlowFuse, developers can share projects, collaborate in real time, and streamline workflows for faster development and deployment.

Manage Remote Deployments: Many organizations deploy Node-RED instances to remote servers or edge devices. FlowFuse automates this process by creating snapshots of Node-RED instances that can be deployed to multiple remote targets. It also allows for rollback when a deployment goes wrong.

Streamline Application Delivery: FlowFuse simplifies the software development lifecycle of Node-RED applications. You can set up DevOps delivery pipelines to support development, test, and production environments for Node-RED application delivery; see Introduction to FlowFuse.

Flexible FlowFuse Deployment: We want to make it easy for you to use FlowFuse, so we offer FlowFuse Cloud, a managed cloud service, or a self-hosted solution. You have the freedom to choose the deployment method that works best for your organization.

Singer / Meltano¶

Singer is a composable open source ETL framework and specification, including powerful data extraction and consolidation elements. Meltano is a declarative code-first data integration engine adhering to the Singer specification.

Meltano Hub is the single source of truth for finding Meltano plugins as well as Singer taps and targets.
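In the Singer specification, a tap writes JSON messages of type `SCHEMA`, `RECORD`, and `STATE`, one per line, and a target reads them. The following sketch of a toy tap shows the message shapes only. The function `emit_stream`, the `users` stream, and the contents of the state value are hypothetical, and the sketch writes to an in-memory buffer where a real tap would write to standard output.

```python
import io
import json


def emit_stream(out, stream, schema, key_properties, rows):
    """Write Singer-style messages for one stream to a file-like object."""

    def write(message):
        out.write(json.dumps(message) + "\n")

    # Describe the stream before sending any of its records.
    write({
        "type": "SCHEMA",
        "stream": stream,
        "schema": schema,
        "key_properties": key_properties,
    })
    for row in rows:
        write({"type": "RECORD", "stream": stream, "record": row})
    # The state value is free-form; this bookmark layout is an assumption.
    write({"type": "STATE", "value": {stream: {"rows_emitted": len(rows)}}})


buffer = io.StringIO()
emit_stream(
    buffer,
    stream="users",
    schema={"properties": {"id": {"type": "integer"}, "name": {"type": "string"}}},
    key_properties=["id"],
    rows=[{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}],
)

# A target would consume the same lines from standard input.
messages = [json.loads(line) for line in buffer.getvalue().splitlines()]
```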

SQL Server Integration Services¶

Microsoft SQL Server Integration Services (SSIS) is a component of the Microsoft SQL Server database software that can be used to perform a broad range of data migration tasks.

SSIS is a platform for data integration and workflow applications. It features a data warehousing tool used for data extraction, transformation, and loading (ETL). The tool may also be used to automate maintenance of SQL Server databases and updates to multidimensional cube data.

Integration Services can extract and transform data from a wide variety of sources such as XML data files, flat files, and relational data sources, and then load the data into one or more destinations.

Integration Services includes a rich set of built-in tasks and transformations, graphical tools for building packages, and an SSIS Catalog database to store, run, and manage packages.