Columnar databases are a cornerstone of modern analytics. By storing data column by column rather than row by row, they minimize I/O and excel at scanning large datasets and performing aggregations at scale. This makes them a natural fit for dashboards, reporting, and business intelligence.

If you are looking for a clear definition and architectural overview, see our guide on columnar databases.

But as analytics moves closer to operational systems, a new challenge emerges. Teams increasingly want to run analytical queries on data as it arrives, not hours or minutes later. In practice, many traditional columnar databases struggle with real-time analytics, not because columnar storage is flawed, but because their architectures were designed for a different world.

Columnar Databases Were Built for Batch Analytics

Most columnar databases originated in a batch-oriented analytics environment.

Their design assumptions typically include:

- data is loaded in large chunks
- datasets are mostly immutable once written
- queries prioritize throughput over latency
- freshness is measured in minutes or hours, not seconds

Under these conditions, columnar storage shines. Data can be heavily compressed, organized into large segments, and scanned efficiently during analytical queries. Indexing, optimization, and storage layout are tuned for stable data rather than constant change.
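To make the scan and compression benefits concrete, here is a minimal Python sketch. The sample rows, the column names, and the `run_length_encode` helper are all hypothetical and chosen only for illustration. Real engines use far more elaborate encodings and on-disk layouts, and run-length encoding is just one of several common schemes.

```python
from itertools import groupby

# Hypothetical sample rows: (device_id, status, temperature)
rows = [
    (1, "ok", 20.5),
    (2, "ok", 21.0),
    (3, "ok", 19.5),
    (4, "warn", 30.0),
    (5, "warn", 31.0),
    (6, "ok", 20.0),
]

# Column layout: one list per column instead of one tuple per row.
columns = {
    "device_id": [r[0] for r in rows],
    "status": [r[1] for r in rows],
    "temperature": [r[2] for r in rows],
}


def run_length_encode(values):
    """Collapse runs of equal adjacent values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


# An aggregate over one column only needs that column's values.
values_read_columnar = len(columns["temperature"])
# A row layout has to walk every field of every row to reach the same values.
values_read_row_wise = sum(len(r) for r in rows)

avg_temperature = sum(columns["temperature"]) / len(columns["temperature"])

# Repetitive columns compress well once their values sit next to each other.
encoded_status = run_length_encode(columns["status"])
```

In this toy dataset the average touches 6 values in the column layout versus 18 in the row layout, and the six `status` values collapse into three runs. Both effects grow with table width and with how stable and repetitive the stored data is, which is why the batch assumptions above suit this layout so well.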

This model works extremely well for historical analysis and scheduled reporting. It becomes much harder to sustain when data never stops arriving.

Real-Time Analytics Changes the Rules

Real-time analytics introduces fundamentally different requirements.

In practice, real-time analytics means:

- continuous ingestion of new data
- queries that must include the most recent events
- low and predictable query latency
- concurrent reads and writes on the same dataset

These requirements create tension with traditional columnar architectures. The system must ingest data quickly, make it queryable immediately, and still preserve the performance benefits of columnar storage.

For many columnar databases, this is where the cracks start to show.

Where Traditional Columnar Architectures Break Down

Write amplification and ingestion pressure

Columnar storage is optimized for reading large, contiguous blocks of data. Writing small increments of data efficiently is much harder. As a result, many systems buffer incoming data and periodically reorganize it into columnar segments.

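This leads to write amplification: each incoming row may be rewritten several times as the system merges and re-compresses segments. A small amount of incoming data can therefore trigger expensive background work to maintain compression and storage layout.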
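The effect can be estimated with a small simulation. The sketch below assumes a simplified tiered-merge model in which every flush writes one small segment and a fixed number of same-level segments (`merge_fanout`) is merged into one larger segment. The function name, its parameters, and the merge policy are assumptions made for illustration, not the behavior of any particular database.

```python
def simulate_write_amplification(num_flushes, rows_per_flush, merge_fanout):
    """Return (rows_ingested, rows_written) for a simple tiered-merge model."""
    tiers = {}  # merge level -> sizes of the segments currently at that level
    rows_written = 0
    for _ in range(num_flushes):
        # Flushing the buffer writes each row once, as a small level-0 segment.
        rows_written += rows_per_flush
        tiers.setdefault(0, []).append(rows_per_flush)
        level = 0
        # Whenever a level fills up, merge its segments into one larger
        # segment on the next level. Merging rewrites every row involved.
        while len(tiers.get(level, [])) >= merge_fanout:
            merged = sum(tiers[level])
            tiers[level] = []
            rows_written += merged
            tiers.setdefault(level + 1, []).append(merged)
            level += 1
    return num_flushes * rows_per_flush, rows_written


ingested, written = simulate_write_amplification(
    num_flushes=16, rows_per_flush=100, merge_fanout=4
)
amplification = written / ingested
```

With these inputs, 1,600 ingested rows cause 4,800 row writes, an amplification factor of 3.0: one write at flush time plus one rewrite per merge level. In this model, smaller and more frequent flushes improve freshness but add merge levels, which raises the factor.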

Indexing and segment creation latency

To achieve fast query performance, columnar databases often rely on segment-level metadata, indexing, or sorting. These structures are typically built during batch ingestion or compaction phases.

When data arrives continuously, the system must choose between:

- delaying query visibility until segments are finalized
- exposing partially optimized data and degrading query performance

Neither option is ideal for real-time use cases.

Query performance vs freshness trade-offs

Many systems implicitly trade freshness for speed. Queries run fast, but only on data that has already been compacted or indexed. Recent data may be excluded, partially visible, or handled through slower code paths.

This creates uneven performance and unpredictable latency, especially when queries span both historical and fresh data.
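The following Python sketch illustrates that trade-off under simplifying assumptions. Sealed segments carry min/max metadata that lets a query skip them, while a hypothetical unsealed `fresh_buffer` has no metadata and must be scanned in full. The `Segment` class and the `seal` and `count_in_range` helpers are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A sealed, immutable chunk of a timestamp column with min/max metadata."""
    timestamps: list
    min_ts: int
    max_ts: int


def seal(timestamps):
    """Sort the values and record their range, as a compaction step might."""
    ordered = sorted(timestamps)
    return Segment(ordered, ordered[0], ordered[-1])


def count_in_range(segments, fresh_buffer, start, end, include_fresh):
    """Count events with start <= ts <= end; also report values examined."""
    matches = 0
    examined = 0
    for seg in segments:
        # Segment metadata lets the query skip segments outside the range.
        if seg.max_ts < start or seg.min_ts > end:
            continue
        examined += len(seg.timestamps)
        matches += sum(1 for ts in seg.timestamps if start <= ts <= end)
    if include_fresh:
        # The unsealed buffer has no metadata yet, so it is scanned in full.
        examined += len(fresh_buffer)
        matches += sum(1 for ts in fresh_buffer if start <= ts <= end)
    return matches, examined


segments = [seal(range(0, 100)), seal(range(100, 200)), seal(range(200, 300))]
fresh_buffer = [305, 301, 299, 310, 150]  # unsorted, includes a late event

stale_result = count_in_range(segments, fresh_buffer, 290, 310, include_fresh=False)
fresh_result = count_in_range(segments, fresh_buffer, 290, 310, include_fresh=True)
```

Skipping the buffer gives a fast but incomplete answer of 10 matches. Including it gives the complete answer of 14, at the cost of scanning unoptimized data. With a five-element buffer that cost is negligible, but it grows with the ingestion rate and with the time between compactions.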

Why “Near Real-Time” Is Often a Compromise

To manage these challenges, many columnar databases adopt micro-batching. Incoming data is grouped into small batches, ingested periodically, and made queryable after a short delay. Vendors often describe this as “near real-time.”

While this approach works for some use cases, it introduces compromises:

- data is not immediately queryable
- freshness depends on batch size and configuration
- latency varies based on ingestion cycles
- operational complexity increases

For monitoring, alerting, and user-facing analytics, these delays can be significant.
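A rough way to reason about these delays is to compute, for each event, how long it waits before it becomes queryable. The sketch below assumes fixed batch boundaries and a constant load time per batch. The `visibility_delays` function and its parameters are hypothetical and do not describe any specific product.

```python
def visibility_delays(arrival_times, batch_interval, load_time):
    """Return, per event, the delay between arrival and becoming queryable.

    Assumes batches close at fixed multiples of batch_interval and that each
    batch takes load_time to ingest before its rows are visible to queries.
    """
    delays = []
    for t in arrival_times:
        # The batch containing the event closes at the next interval boundary.
        batches_elapsed = int(t // batch_interval) + 1
        batch_close = batches_elapsed * batch_interval
        delays.append(batch_close + load_time - t)
    return delays


arrivals = [0, 2, 5, 8, 9]  # arrival times in seconds
delays = visibility_delays(arrivals, batch_interval=10, load_time=2)

worst_case = max(delays)
average = sum(delays) / len(delays)
```

With a 10-second batch interval and a 2-second load time, these events become visible after 12, 10, 7, 4, and 3 seconds. The worst case is roughly the batch interval plus the load time, so an alert based on this data can lag the triggering event by that amount.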

What a Real-Time Columnar System Needs Instead

Supporting real-time analytics on columnar storage requires architectural choices beyond the storage format itself.

Key requirements include:

- continuous ingestion without heavy compaction cycles
- immediate queryability of newly ingested data
- distributed execution that scales reads and writes together
- minimal reliance on manual tuning or pre-aggregation

In other words, real-time columnar analytics is less about columnar storage in isolation and more about how the system integrates ingestion, storage, and query execution.

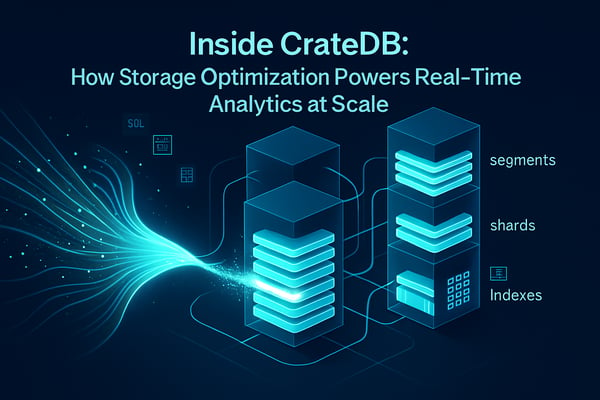

How CrateDB Approaches Real-Time Columnar Analytics

CrateDB was designed with real-time analytics as a primary use case rather than an extension of batch analytics.

It uses columnar storage while supporting continuous ingestion and immediate queryability. Newly ingested data becomes available for analytical queries without requiring batch loading, pre-aggregation, or delayed indexing phases.

By combining distributed SQL execution with a columnar engine, CrateDB allows teams to run analytical queries across both fresh and historical data in the same system. This makes columnar analytics usable for operational dashboards, monitoring systems, and data-driven applications where latency and freshness matter.

When a Traditional Columnar Database Is Still the Right Choice

Despite these limitations, traditional columnar databases remain an excellent choice for many scenarios.

They are often ideal when:

- analytics is primarily historical
- data arrives in predictable batches
- query latency can tolerate delays
- cost efficiency is prioritized over freshness

Batch-oriented BI and reporting workloads continue to benefit from mature columnar systems optimized for scan performance and compression.

Choosing the Right Columnar Architecture

Columnar storage is a powerful foundation for analytics, but it does not automatically make a system suitable for real-time workloads. Architecture matters as much as storage format.

Understanding the trade-offs between batch-oriented and real-time columnar systems helps teams choose the right tool for their use case, rather than assuming that all columnar databases behave the same way.

If you are evaluating columnar databases for real-time analytics, the key question is not whether the system uses columnar storage, but whether it was designed to operate on continuously changing data.
