As data volumes grow and applications demand instant responses, search can no longer live on a single node. From log analytics and IoT platforms to observability and real-time dashboards, organizations increasingly rely on distributed search engines to query massive datasets with low latency.

But while distributed search solves part of the problem, modern workloads demand more than keyword matching. They require fast aggregations, complex filters, joins, and real time analytics on constantly changing data.

This article explores what distributed search engines are, why they exist, where they struggle, and how search is converging with real time analytics in modern data architectures.

What Is a Distributed Search Engine?

A distributed search engine is a system that indexes and queries data across multiple nodes instead of relying on a single machine.

Key characteristics include:

- Data partitioned across shards

- Parallel query execution

- Replication for availability and fault tolerance

- Horizontal scalability by adding nodes

When a query is executed, it is sent to multiple nodes simultaneously, each processing its local data. Results are then merged and returned to the user.

This architecture allows search systems to scale far beyond the limits of a single server, both in terms of data volume and query throughput.

Why Distributed Search Exists

Distributed search engines emerged to address several fundamental challenges:

Data scale: Logs, metrics, events, and machine generated data grow continuously. Centralized search systems struggle to index and query terabytes or petabytes of data efficiently.

Low latency requirements: Search is often part of operational workflows. Whether it is troubleshooting incidents or powering user facing applications, results must be returned in milliseconds.

Availability and resilience: Modern systems must tolerate hardware failures without downtime. Distribution and replication help ensure continuous availability.

Concurrent access: Many users and systems may query the same data at the same time. Parallel execution improves throughput and responsiveness.

These requirements made distributed architectures the default for search at scale.

Where Traditional Distributed Search Engines Struggle

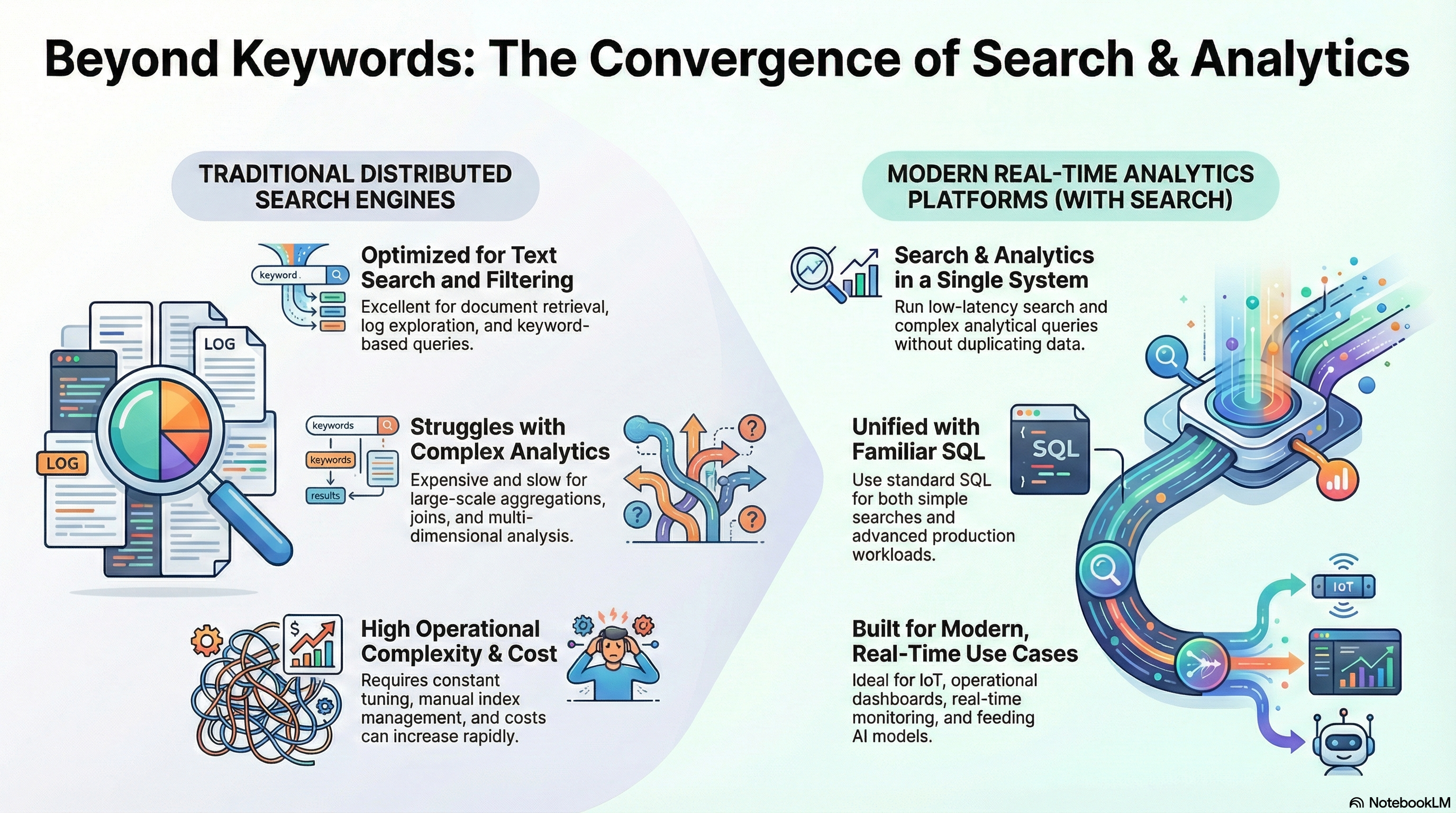

While distributed search engines are excellent at text search and filtering, they often hit limitations when workloads evolve beyond pure search.

Complex aggregations: Search engines are optimized for inverted indexes and relevance scoring. Large scale aggregations across many dimensions can become expensive and slow, especially when data cardinality is high.

Analytical queries: Business and operational analytics require grouping, time window analysis, statistical functions, and multi dimensional exploration. These patterns are not always a natural fit for search first systems.

Joins and relationships: Many real world datasets are relational. Modeling relationships and performing joins is difficult or inefficient in most search engines.

Operational complexity: Distributed search clusters require ongoing tuning. Shard sizing, index lifecycle management, rebalancing, and performance optimization often demand constant attention.

Cost predictability: As data grows, costs can increase rapidly due to replication, storage overhead, and compute intensive query patterns.

These challenges become more visible as organizations move from simple search use cases to real time analytics and decision making.

The Convergence of Search and Real Time Analytics

Modern data platforms increasingly blur the line between search and analytics.

Typical workloads now combine:

- Full text and structured search

- Time series analysis

- Aggregations over live data streams

- Geospatial queries

- SQL based exploration

- Feeding downstream systems such as dashboards and AI models

In these scenarios, search is no longer the final goal. It is one capability among many required to extract value from data in real time. This shift is driving a move away from search only architectures toward platforms that treat search and analytics as first class citizens.

Architectural Approaches to Distributed Search

There is no single right approach. The best architecture depends on workload complexity and business requirements.

Search first architectures: Optimized for text search and relevance scoring. Best suited for document retrieval, log exploration, and keyword based queries. Limitations appear when analytics complexity increases.

Analytics first architectures: Designed for fast aggregations, joins, and analytical queries across large datasets. Search capabilities exist but are integrated into a broader analytical engine. Better suited for operational analytics, monitoring, and real time decision making.

Hybrid approaches: Some organizations combine search engines with analytics databases. While flexible, this introduces data duplication, synchronization challenges, and higher operational overhead.

The trend is moving toward fewer systems that can do more, rather than stitching together many specialized tools.

Distributed Search in a Real Time Analytics Database

Modern real time analytics databases embed distributed search capabilities directly into their query engine. This approach allows teams to:

- Run search and analytics in a single system

- Use SQL for both exploratory queries and production workloads

- Analyze structured, semi structured, and time series data together

- Scale horizontally without manual index tuning

- Support low latency queries on continuously ingested data

Instead of treating search as a standalone engine, it becomes part of a unified analytical platform.

How CrateDB Approaches Distributed Search

CrateDB approaches distributed search from an analytics first perspective. Rather than being built primarily as a search engine, CrateDB is a distributed real time analytics database where search is a native capability of the query engine. Data is automatically distributed across nodes, indexed on ingest, and immediately available for both search and analytical queries.

This means teams can:

- Search and filter high volume data using familiar SQL syntax

- Run fast aggregations and time based queries on the same dataset

- Combine search with joins, geospatial queries, and JSON fields

- Analyze live and historical data without separate indexing pipelines

Because CrateDB is designed for continuously changing data, it removes the need to predefine rigid schemas or manually tune indexes for every new query pattern. As data evolves, queries continue to perform without re-architecture or downtime.

CrateDB is commonly used in scenarios where distributed search alone is not enough, such as:

- IoT and sensor analytics

- Log and event analytics

- Operational dashboards

- Real time monitoring and alerting

- Feeding AI and machine learning pipelines with fresh data

When Does This Approach Make Sense?

A distributed analytics platform with search capabilities is a strong fit when:

- Search is combined with aggregations and analytics

- Data arrives continuously from streams or sensors

- Queries evolve frequently and cannot be pre optimized

- Operational simplicity and cost predictability matter

- Results must feed dashboards, alerts, or AI systems in real time

Pure search engines still excel at document retrieval and relevance driven use cases. But as soon as analytics becomes central, their limitations become visible.

Conclusion

Distributed search engines solved the problem of search at scale. But modern data workloads demand more than distributed indexing and keyword matching.

As search, analytics, and real time decision making converge, organizations increasingly look for platforms that combine these capabilities in a single, scalable system.

The future of distributed search is not search alone, but search as part of a real time analytics platform built for fast insights, operational simplicity, and continuous data.

To go further, discover how CrateDB manages distributed search alongside real-time analytics.

![CrateDB is a Perfect [Rockset] Replacement](https://cratedb.com/hs-fs/hubfs/undefined-Jul-14-2024-08-58-13-7644-PM.png?width=600&name=undefined-Jul-14-2024-08-58-13-7644-PM.png)