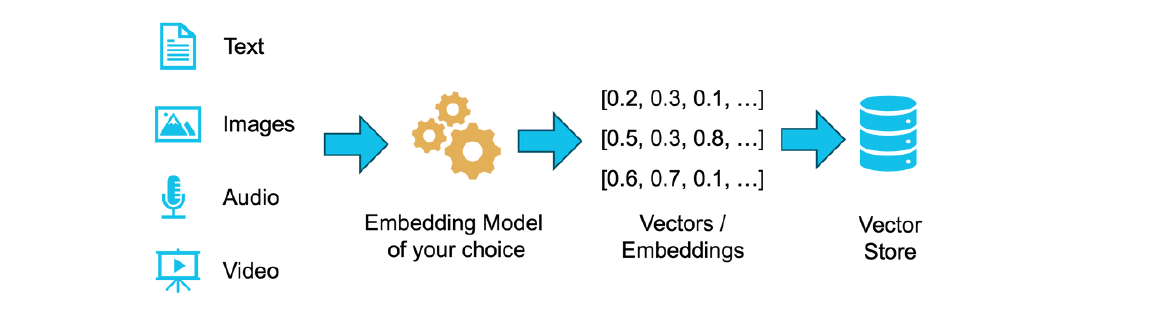

In Generative AI, multimodal vector embeddings are becoming increasingly common. Whatever the kind of source data (text, images, audio, or video), an embedding algorithm of your choice translates it into a vector representation. The vector consists of many numeric values, and its length depends on the algorithm used. These vectors, along with chunks of the source data, are then stored in a vector store.

Vector databases are well suited to tasks such as similarity search, natural language processing, and computer vision, because comparing vectors makes it possible to find patterns across large volumes of data. Integrating this vector data with CrateDB is straightforward, thanks to its native SQL interface.

CrateDB offers a FLOAT_VECTOR(n) data type, where n is the length of the vector. For columns of this type, CrateDB builds an HNSW (Hierarchical Navigable Small World) graph in the background for efficient nearest neighbour search. The KNN_MATCH function executes an approximate K-nearest neighbour (KNN) search and uses the Euclidean distance to determine similar vectors. You only need to pass the target vector and the number of nearest neighbours you want to find.

The example below creates a table with a text field and a 4-dimensional embedding field, inserts records with a simple INSERT INTO command, and uses the KNN_MATCH function to perform a similarity search.

```sql
CREATE TABLE word_embeddings (
  text STRING PRIMARY KEY,
  embedding FLOAT_VECTOR(4)
);
```

```sql
INSERT INTO word_embeddings (text, embedding)
VALUES
  ('Exploring the cosmos', [0.1, 0.5, -0.2, 0.8]),
  ('Discovering moon', [0.2, 0.4, 0.1, 0.7]),
  ('Discovering galaxies', [0.2, 0.4, 0.2, 0.9]),
  ('Sending the mission', [0.5, 0.9, -0.1, -0.7]);
```

```sql
SELECT text, _score
FROM word_embeddings
WHERE knn_match(embedding, [0.3, 0.6, 0.0, 0.9], 2)
ORDER BY _score DESC;
```

| text                 | _score   |
|----------------------|----------|
| Discovering galaxies | 0.917431 |
| Discovering moon     | 0.909090 |
| Exploring the cosmos | 0.909090 |
| Sending the mission  | 0.270270 |
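The scores in the table above are consistent with the rule `_score = 1 / (1 + d²)`, where `d` is the Euclidean distance between the query vector and the stored vector. That rule is inferred from the example values and should be read as an assumption, not as a documented guarantee. The minimal Python sketch below recomputes the scores with an exact, brute-force ranking. The helper names `squared_euclidean`, `score`, and `rank` are hypothetical and not part of CrateDB.

```python
# Rows from the word_embeddings example table.
ROWS = {
    "Exploring the cosmos": [0.1, 0.5, -0.2, 0.8],
    "Discovering moon": [0.2, 0.4, 0.1, 0.7],
    "Discovering galaxies": [0.2, 0.4, 0.2, 0.9],
    "Sending the mission": [0.5, 0.9, -0.1, -0.7],
}

QUERY = [0.3, 0.6, 0.0, 0.9]


def squared_euclidean(a, b):
    """Sum of squared component differences between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def score(a, b):
    """Assumed scoring rule: 1 / (1 + squared Euclidean distance)."""
    return 1.0 / (1.0 + squared_euclidean(a, b))


def rank(query, rows):
    """Exact (brute-force) ranking of all rows by descending score."""
    scored = [(text, score(query, vec)) for text, vec in rows.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


for text, s in rank(QUERY, ROWS):
    print(f"{text:<22}{s:.6f}")
```

The printed values agree with the table up to rounding in the last digit. Because this sketch compares the query against every row, it is only practical for small samples. The HNSW index is what avoids that full scan on large tables.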

The example below shows you how to search for data similar to 'Discovering galaxies' in your table. To do so, you combine the KNN_MATCH function with a sub-select query that returns the embedding associated with 'Discovering galaxies'.

```sql
SELECT text, _score
FROM word_embeddings
WHERE knn_match(
  embedding,
  (SELECT embedding FROM word_embeddings WHERE text = 'Discovering galaxies'),
  2
)
ORDER BY _score DESC;
```

| text                 | _score   |
|----------------------|----------|
| Discovering galaxies | 1        |
| Discovering moon     | 0.952381 |
| Exploring the cosmos | 0.840336 |
| Sending the mission  | 0.250626 |

Combining Vectors, Source and Contextual Information

Combining your vector data (vectorized chunks of your source data) with the original data and some additional contextual information is very powerful.

As we will outline in this chapter, a JSON payload offers the most flexible way to store and query your metadata. A typical table schema contains a FLOAT_VECTOR column for the embedding and an OBJECT column for the source and contextual information.

In the example below, the table contains a FLOAT_VECTOR column with 1536 dimensions. If you are using multiple embedding algorithms, you can add new columns with a different vector length value.

```sql
CREATE TABLE input_values (
  source OBJECT(DYNAMIC),
  embedding FLOAT_VECTOR(1536)
);
```

In an INSERT statement, you can pass your existing JSON data directly, such as a chunk of text extracted from a PDF file or any other information source. You then use your preferred algorithm to generate an embedding, which is inserted into the table alongside it. If subsequent pieces of source data carry different annotations, context information, or metadata, you can simply add them to your JSON document, and the new keys are automatically added as new columns in the table.

```sql
INSERT INTO input_values (source, embedding) VALUES (
  '{
    "id": "chunk_001",
    "text": "This is the first chunk of text. It contains some information that will be vectorized.",
    "metadata": {
      "author": "Author A",
      "date": "2024-03-15",
      "category": "Education"
    },
    "annotations": [
      { "type": "keyword", "value": "vectorized" },
      { "type": "sentiment", "value": "neutral" }
    ],
    "context": {
      "previous_chunk": "",
      "next_chunk": "chunk_002",
      "related_topics": ["Data Processing", "Machine Learning"]
    }
  }',
  [1.2, 2.1, ..., 3.2] -- Embedding created by your favorite algorithm
);
```
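In application code, the payload and the embedding are usually bound as parameters instead of being written into the statement text. The sketch below prepares such a parameterised INSERT and checks the vector length before anything is sent. It assumes a driver that uses `?` placeholders and that accepts JSON text for the OBJECT column, as in the literal statement above. `build_insert` and `EMBEDDING_DIM` are hypothetical names, not part of any CrateDB API.

```python
import json

# Assumption: matches FLOAT_VECTOR(1536) in the input_values table definition.
EMBEDDING_DIM = 1536

INSERT_SQL = "INSERT INTO input_values (source, embedding) VALUES (?, ?)"


def build_insert(chunk, embedding, dim=EMBEDDING_DIM):
    """Return (sql, params) for one row; hypothetical helper for illustration."""
    if len(embedding) != dim:
        raise ValueError(f"expected {dim} values, got {len(embedding)}")
    # Serialise the payload as JSON text, mirroring the literal in the article.
    payload = json.dumps(chunk)
    # Coerce every component to float so integers do not slip into the vector.
    return INSERT_SQL, (payload, [float(v) for v in embedding])


chunk = {
    "id": "chunk_001",
    "text": "This is the first chunk of text.",
    "metadata": {"author": "Author A", "category": "Education"},
}

# Placeholder vector; a real embedding would come from your embedding model.
sql, params = build_insert(chunk, [0.0] * EMBEDDING_DIM)
```

With a DB-API style driver, the pair would typically be passed on as `cursor.execute(sql, params)`. Validating the length up front turns a dimension mismatch into an immediate, readable error on the client side.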

Adding Filters to Similarity Search

You can also add query filters to your similarity search easily.

The example below shows you how to search for similar text snippets in the 'Education' category. You can combine the similarity search with any other filter you need, such as geospatial shapes, which makes it adaptable to the requirements of your specific use case.

```sql
SELECT source['id'], source['text']
FROM input_values
WHERE knn_match(embedding, ?, 10) -- Embedding to search
  AND source['metadata']['category'] = 'Education'
ORDER BY _score DESC
LIMIT 10;
```
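To sanity-check the results of a filtered query like the one above on a small sample, an exact in-memory version can serve as a reference. The sketch below keeps only rows in the requested category, ranks them by score, and truncates the list. It assumes the scoring rule `1 / (1 + d²)` over the Euclidean distance `d` and uses toy 2-dimensional vectors. `filtered_search` is a hypothetical helper, and CrateDB itself performs an approximate search, so results on large tables may differ.

```python
def score(a, b):
    """Assumed scoring rule: 1 / (1 + squared Euclidean distance)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return 1.0 / (1.0 + sum((x - y) ** 2 for x, y in zip(a, b)))


def filtered_search(rows, query, category, limit=10):
    """Keep rows in `category`, rank by descending score, return the top `limit`."""
    matches = [
        (row["source"]["id"], row["source"]["text"], score(query, row["embedding"]))
        for row in rows
        if row["source"].get("metadata", {}).get("category") == category
    ]
    matches.sort(key=lambda m: m[2], reverse=True)
    return matches[:limit]


# Toy rows with 2-dimensional vectors, purely for illustration.
ROWS = [
    {"source": {"id": "a", "text": "Intro to vectors",
                "metadata": {"category": "Education"}},
     "embedding": [0.0, 0.0]},
    {"source": {"id": "b", "text": "Advanced indexing",
                "metadata": {"category": "Education"}},
     "embedding": [1.0, 1.0]},
    {"source": {"id": "c", "text": "Match report",
                "metadata": {"category": "Sports"}},
     "embedding": [0.0, 0.1]},
]

for row_id, text, s in filtered_search(ROWS, [0.0, 0.0], "Education"):
    print(row_id, text, round(s, 6))
```

Row "c" is closer to the query than row "b" but is excluded by the category filter, which is the behaviour the WHERE clause expresses in SQL.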